"AI-pocalypse" & the Coming Epidemic of Mass Elder Financial Abuse

I listened to a recent podcast which made me realize we are likely on the cusp of an epidemic of elder financial abuse the likes of which we can't imagine.

Gonna plug these guys because the content is generally fantastic.

The format of the podcast has Erik Townsend (former tech professional turned hedge fund manager and financial podcaster) doing weekly interviews with macro / finance greats like Luke Gromen, the hugely popular Lyn Alden, and the mercurial Hugh Hendry, former manager of the hugely successful hedge fund Eclectica. Each interview is typically preceded by a review of the week's major financial market events by his co-host, Patrick Ceresna.

I recently listened to a MacroVoices episode (“Episode #406: Matt Barrie: AI-pocalypse Now”) which absolutely knocked my socks off - and honestly got me kind of scared about what’s coming when it comes to financial scams that target older people, i.e., what’s commonly referred to as elder financial abuse.

In the MacroVoices “AI-Pocalypse” episode, Erik and his guest cover the good, but also the potentially-dystopian-bad that AI technology may be bringing to us - much, much sooner than we think.

Matt Barrie is the CEO of the website Freelancer.com (which boasts a userbase of around 70 million freelance gig workers). In his interview with Erik Townsend, he talked about a number of ways AI will change the world of work, the world of creativity, of medicine, and of industry.

A quote from the interview that stuck with me, and that is very relevant to his website, is his claim that with AI tools “an average copywriter can now write exceptional level copy in any field, an average designer can become an exceptional designer, and an average videographer can become an exceptional videographer.”

While this is excellent for people in, say, non-English-speaking, less-industrialized countries, it poses a serious threat to the US middle class in ways that might rival the “offshoring” phenomenon that eviscerated the middle class from the 80s & 90s on.

Although the MacroVoices guys and their guest, Matt Barrie, didn’t directly talk about AI and its potential applications for elder abuse, the talk got me thinking about, and very worried about, this issue, and inspired this article.

Very Brief History of AI

“Artificial Intelligence” has gone retail, with the ironically-named “OpenAI” (with its somewhat scandalous midstream switch from nonprofit to for-profit status, and its issues with data transparency) and its flagship product, ChatGPT, along with Elon Musk’s upstart, ‘anti-woke’ competitor, Grok, exploding into public consciousness.

As one of my favorite Twitter / X follows, Mike Benz of the Foundation for Freedom Online notes - AI technology has been around for many years. In 1997, the chess engine “Deep Blue” famously beat chess grandmaster Garry Kasparov, which was considered a milestone in the development of the technology.

Since then, there’s been growing interest in the technology, with the Pentagon’s speculative research arm, DARPA, committing billions from 2018 on to its “AI Next” campaign - and likely many billions more in ‘off the books’ funding for AI projects. The aims are, among other things, to stay ahead of the growing threat seen from CCP China, and to supercharge the NATO / US Intelligence Community “Blob’s” growing interest in directing the censorship of anti-globalist “populist” political movements around the world (e.g., Trump, Brexit, etc.) that threaten the status quo.

To Understand the Potential of AI - Best Is to Experience It

The greatest part of the #406 MacroVoices episode is how it starts - with the slightly-stodgy (but always super knowledgeable, incredibly talented!) host Erik Townsend introducing the podcast.

Except it isn’t Erik. As he talks and introduces the podcast, he announces that it isn’t actually him speaking - it’s an AI version of him, generated from a speech sample. I’ll be totally honest - although Mr. Townsend at one point comments that it doesn’t sound quite like him, I was only half-listening during the introduction and I had zero clue I was listening to the ersatz host. When I finally learned, about three minutes into the intro, that it wasn’t actually him speaking, I almost fell out of my chair and then listened intently to the rest of the episode. Listen to the podcast for yourself, including the intro, here:

Unless you live under a rock most days of the week, you’ve likely at least tried out the popular LLM chatbot, ChatGPT - and if not, I recommend you try it, so you can see what interacting with a truly conversational AI chatbot is like.

I also personally recommend, and currently pay for, the premium version of Perplexity.AI, which makes use of the current LLM behind ChatGPT, as well as several others. Frankly, it was such an incredible game-changer for doing internet research that I simply had to have it - it blew Google so completely out of the water nothing was left.

For the best, most mind-blowing, and slightly scary experience, I recommend you hop over to HeyGen.com and upload a two-minute sample video of yourself speaking. The AI engine then creates what is essentially a synthetic version of you, which you can literally program to say whatever you like. Demo video below:

Let’s Not Be “Luddites” Here

Looking at the very wide sweep of history, the societal impact of AI technology, along with our collective anxieties and fears about it, is tracing a very familiar and well-worn pattern that reminds me a bit of the anxieties and fears famously channelled by the original, 19th-century ‘Luddites.’

The Luddites were a movement of textile workers who famously protested their displacement and ill-treatment by the introduction of mechanization to the textile industry, such as via the stocking frame, which they frequently targeted in destructive protests.

The term luddite, then, has taken on the meaning of a person who is against often-innovative technologies because of their disruptive, destructive aspects, with an emphasis on how they destroy jobs and livelihoods in their wake.

Obviously, luddite is mostly a pejorative term in today’s society, because we largely recognize that with any number of disruptive technologies (like the wheel, moveable type, the telephone, etc.), society has adapted and seen net benefits.

This is largely the lens I operate from. I am a technology positivist: I tend to believe that history has shown over and over that technological leaps like the wheel, the automobile, moveable type, and the internet are often extremely chaotic and destabilizing for society to adapt to (for example, the internet has destroyed classified ads and the ‘yellow pages,’ & is in the chaotic process of killing traditional media). Still, I think society, in the end, benefits greatly from technological advances like these.

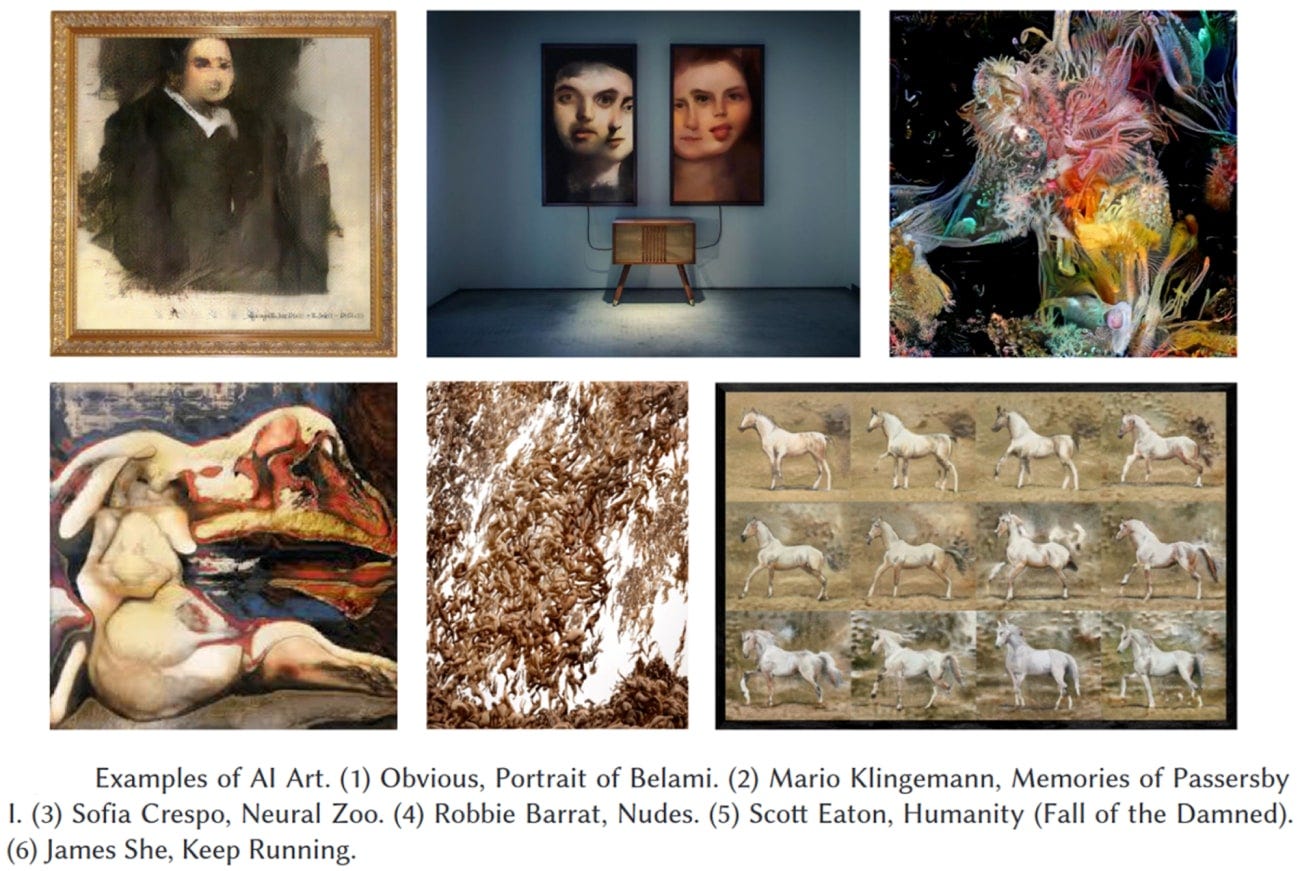

AI, ‘Content Creation’ and Hollywood

There’s an almost staggering number of areas that AI technology and LLMs are worming their way into, including industries like healthcare, security, high tech, and finance (as well as disturbing areas like government censorship). I will not cover them all here.

I wanted to cover two areas, though - Hollywood… and scams.

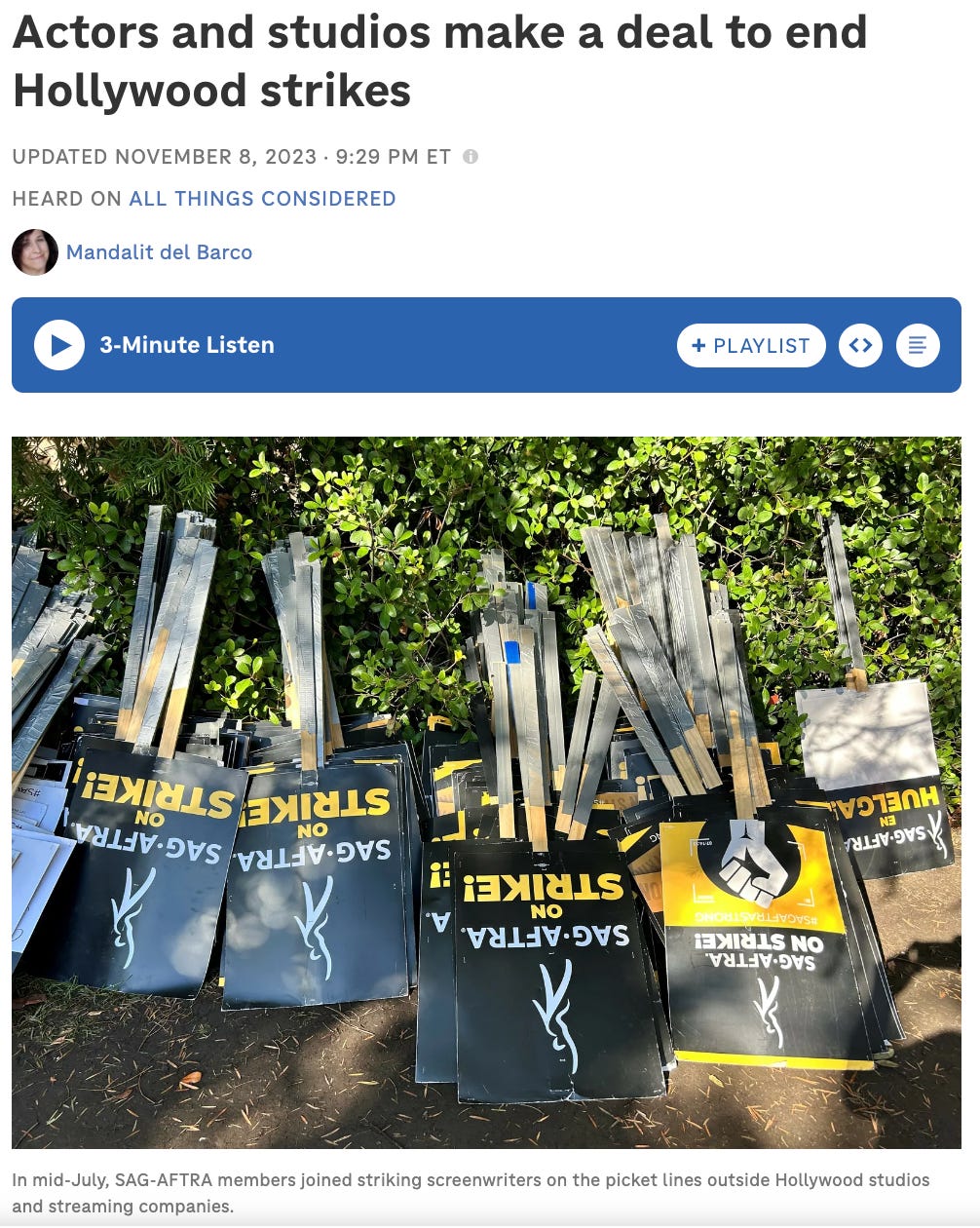

Does everyone recall the recently-concluded Hollywood writers' and actors' guild strikes?

Although writers' and actors' guild strikes in Hollywood aren’t exactly uncommon, the fact that both the Hollywood actors' union (Screen Actors Guild-American Federation of Television and Radio Artists, SAG-AFTRA) and the writers' union (Writers Guild of America, WGA) engaged in simultaneous strike activity - for the first time since 1960 - was highly unusual.

Although much of the dispute was over familiar issues like pay, residuals, and working hours, a major concern of both the writers' and actors' guilds was the issue of AI content, and the growing worry that the day could come when AI puts writers - and even actors - out of jobs.

Their concern is well-placed. According to Matt Barrie, the day will come, likely within the next 12 months, when, with proper computing power, people will be able to enter a text-based prompt and create feature-length, live-action video.

Are you a huge Joss Whedon fan, still bummed they never made another season of Firefly? No problem, in a year or two you should be able to call one up, made to order! Or in my case - did the Chris Nolan “Dark Knight” Batman trilogy leave you pathologically hungry for more?

OK, because soon enough, AI will be able to deliver convincing and gripping versions, with all of your favorite actors included!

But, I could talk about AI content creation and Hollywood all afternoon.

What got me really excited - and worried - was the potential for AI technology to literally supercharge elder financial abuse.

Elder Financial Abuse and Technology

Elder financial abuse is seeing an unprecedented boom in the 2020s. According to the Motley Fool, there were 92,371 victims of elder fraud in 2021, with losses increasing by 391.9%, from $343 million in 2017 to $1.685 billion in 2021.

There are many reasons why older adults are often considered prime targets of financial abuse.

First - on average, they have the most amassed wealth (they’ve had a lifetime to do it). Below is data from the US Federal Reserve, tabulated by DQYDJ:

Second, older adults are more likely to live alone. So, whether due to loneliness, or to the fact that they are solitary and unmonitored, they are more likely to go against their better judgment and trust strangers.

Third, and this is a point I emphasize all the time: advanced age is the single strongest predictor of developing so-called major neurocognitive disorder - dementia. This can compound the issues noted above: due to disinhibition and compromised memory, older adults are more easily manipulated by people who wish to take their money.

Fourth - older people, particularly Boomers and those older still, belong to a particular “technology generation”: when they came of age, the primary technologies were electromechanical (think Boomers) or even just mechanical (think those born before the 1940s).

So they tend to have much more disposable income (which makes them bigger targets), they are more likely to live alone, they are often cognitively impaired, and they are overwhelmingly of a group where digital, mobile, and AI technologies are not their first or even second language.

AI Will Supercharge the Field of Elder Financial Abuse

Think about what AI can do.

Voice mimicry scams. Imagine your elderly mom or grandmother at home, getting a call from someone who sounds like her son, who has apparently gotten into a bunch of trouble and needs money sent to him via Venmo, ASAP!

The “son” speaks in perhaps a slightly stilted fashion, but he looks and sounds like the real thing. Of course granny will send money! AI's ability to impersonate voices and create content could lead to frauds like this - and in the not-so-distant future, these “deepfakes,” these simulations, could even be interactive.

What about AI-Powered Romance Scams? Over the years, I’ve seen single older men in my nursing home get quite interested in being online, specifically to frequent “dating sites,” and I see a lot of them as being exceedingly vulnerable to scammers. AI will super-charge this. Scammers could use AI to create realistic profiles on dating websites, complete with convincing photos and life stories. They could then use these profiles to establish relationships with elderly individuals, eventually convincing them to send money or gifts. The AI can maintain the illusion of a real person by responding to messages and even engaging in real-time conversation in a realistic manner.

Advance-Fee Scams: This is a classic scam - where the victim is promised a large sum of money (like, a lottery winning, or inheritance) in return for a smaller upfront payment - but the promised money never arrives. The whole “Lads from Lagos” Nigerian scammer industry is based on this. AI could take all of this to a whole new level - and be used to create convincing emails or letters from fake lawyers, government officials, or lottery companies. These communications may include realistic-looking logos and signatures, and the language used may be highly convincing.

AI-Powered Phishing Scams: Obviously, we’re all familiar with these - we get emails, often poorly written, that try to get us to click on a link, which leads to a fake website where we’re asked to enter our financial information. AI can be used to create highly targeted, sophisticated phishing emails with polished grammar and spelling that appear to come from legitimate sources, such as banks or government agencies. These emails could be tailored by AI to include personal details about the victim, making them seem more credible - and this could be done on a mass, automated scale. These will become extremely challenging for us younger, “normocognitive” people to deal with - but imagine a technology-naive older person, possibly struggling with early cognitive issues? Lethal.

How Do We Protect Older People (and ourselves) Against AI-powered Financial Scams?

This is the million-dollar question (for some people quite literally). I don’t have any great answers for this.

For the cognitively intact older adults on the younger side, like Baby Boomers (who, comparatively speaking, are doing OK with technology in the modern era, despite not being digital natives), simply making a point of engaging them in routine conversation, to provide education about the evolving nature of online scams, to encourage critical thinking, and to basically check in with them on a regular basis (regular communication!) about how they’re doing could be extremely helpful.

Technological fixes can help - although they aren’t a panacea. So, making sure your older adult loved one has spam filters working, that their phones have caller ID and are blocking unknown callers, and that their computers have up-to-date antivirus software seems to be pretty necessary.

Finally, it could be a matter of getting your older adult loved one to accept professional help, or more direct management of their finances by you. If you’re lucky, they will be comfortable with this.

Obviously, report scams to the authorities when they happen - but recognize that, due to the sheer volume of online scams and the degree to which they can increasingly be automated, the authorities are unable to keep up. The best defense is to not get scammed in the first place.

In 2024 and beyond, this will be tricky indeed.


I'm really glad you wrote this and I hope it gets shared around because I hadn't considered the angle before. Yes, it is frightening.

Now consider that mal-intentioned family members may use this very threat to seize power over finances and do the same type of damage. Like you said, there are no good answers but I agree that education is critical because stopping it will not be possible.

A few months ago, my mother in law (85) in a nursing home in PA was the victim of a bank fraud of more than 100K! Elder financial abuse is expected to boom even more. Please, Gero, may you ask AI to help you correct the use of the possessive pronoun its . It is =it's.

Its wake, its product, its issue, its mind, etc.... neutral, or you sexualise with her mind, his product Thank you VERY MUCH. u r the best